
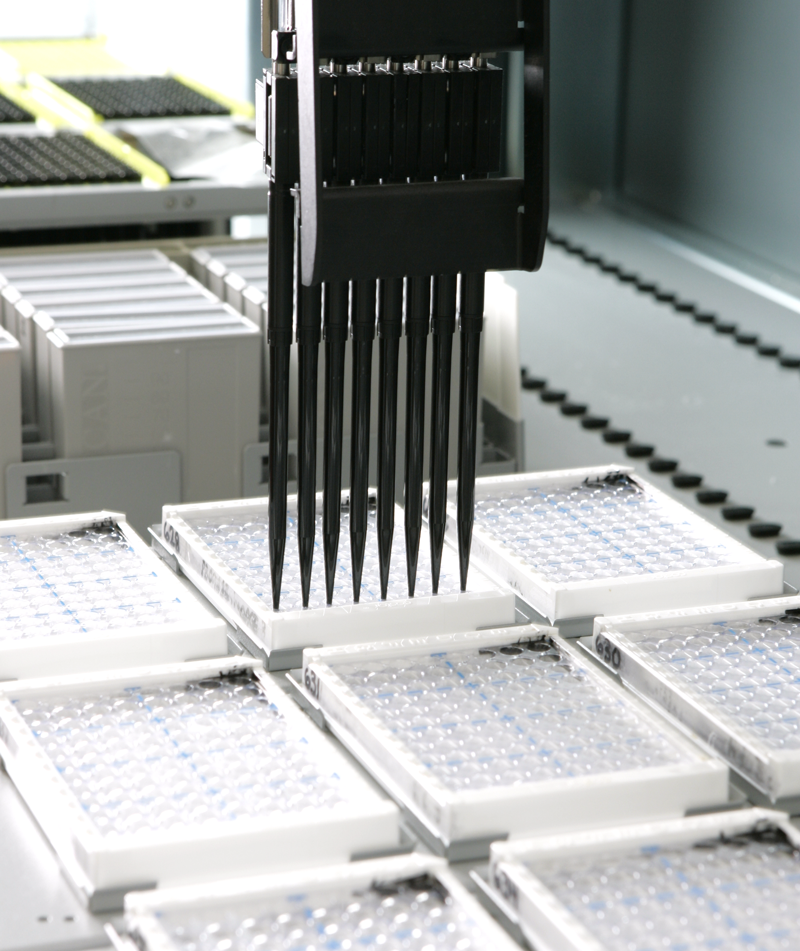

Our core belief is that no single technology can answer every diagnostic research question we encounter. We employ genomic, proteomic, and AI/machine learning technologies to uncover valuable biological information about a patient’s disease.

We are a market leader in clinical proteomics, and we have unparalleled expertise with several tools that give our partners a closer look at disease biology. Our technologies include:


We use highly sensitive technologies to provide comprehensive genomic diagnostic insights.

Our extensively validated deep learning platform is optimized for the discovery of clinical diagnostic tests from multiple technologies, including genomics, transcriptomics, proteomics, and radiomics.